In previous blog posts, we showed that Pydantic is well suited to steering language models and validating their outputs.

Pydantic is useful for more than managing the outputs of these text-based models. In this post, we show how to build a product search API that uses Pydantic as the link between GPT-4 Vision and FastAPI. Pydantic will structure both the data extraction step and the FastAPI requests and responses.

The combination of Pydantic, FastAPI, and OpenAI's GPT models makes a strong stack for developing AI applications:

- Pydantic's Schema Validation: Validating data against predefined schemas keeps it consistent across the application, which is essential when handling outputs from AI models.

- FastAPI's Performance and Ease of Use: FastAPI is well suited to building responsive APIs for AI applications, and its built-in integration with Pydantic handles data validation and serialization.

- OpenAI's GPT-4 Vision Capabilities: GPT-4 Vision lets the application interpret and analyze visual data.

Example: Ecommerce Vision API

We will develop a straightforward e-commerce vision application. Users will upload an image for processing, and the results could be forwarded to a product search API to fetch supplementary results. This functionality could enhance accessibility, boost user engagement, and potentially increase conversion rates. For the moment, however, our primary focus will be on data extraction.

from typing import List

from pydantic import BaseModel, ConfigDict, Field


class SearchQuery(BaseModel):  # (1)!
    product_name: str
    query: str = Field(
        ...,
        description="""A descriptive query to search for the product, include
        adjectives, and the product type. will be used to serve relevant
        products to the user.""",
    )


class MultiSearchQueryResponse(BaseModel):  # (2)!
    products: List[SearchQuery]

    model_config = ConfigDict(  # (3)!
        json_schema_extra={
            "examples": [
                {
                    "products": [
                        {
                            "product_name": "Nike Air Max",
                            "query": "black running shoes",
                        },
                        {
                            "product_name": "Apple iPhone 13",
                            "query": "smartphone with best camera",
                        },
                    ]
                }
            ]
        }
    )

1. The SearchQuery model is introduced to encapsulate a single product and its associated search query. Through the use of Pydantic's Field, a description is added to the query field to facilitate prompting the language model.

2. The MultiSearchQueryResponse model is created to encapsulate the API's response, comprising a list of SearchQuery objects. This model serves as the representation of the response from the language model.

3. We set model_config to attach examples to the schema of the MultiSearchQueryResponse model. These will be used to generate the API documentation and will also be included in the OpenAI prompt.

This output format not only guides the language model and outlines our API's response schema but also facilitates the generation of API documentation. Utilizing json_schema_extra allows us to specify examples for both documentation and the OpenAI prompt.
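To see what the documentation and the prompt will actually receive, we can inspect the generated schema directly. The following is a minimal sketch, assuming Pydantic v2. It redefines trimmed-down copies of the two models above (a shortened description and a single example, both chosen here for brevity) so that it runs on its own.

```python
import json
from typing import List

from pydantic import BaseModel, ConfigDict, Field


class SearchQuery(BaseModel):
    product_name: str
    # Shortened description, for illustration only.
    query: str = Field(..., description="A descriptive query to search for the product.")


class MultiSearchQueryResponse(BaseModel):
    products: List[SearchQuery]

    model_config = ConfigDict(
        json_schema_extra={
            "examples": [
                {"products": [{"product_name": "Nike Air Max", "query": "black running shoes"}]}
            ]
        }
    )


schema = MultiSearchQueryResponse.model_json_schema()

# The field description lives in the nested SearchQuery definition ...
print(schema["$defs"]["SearchQuery"]["properties"]["query"]["description"])
# ... and the examples from json_schema_extra are merged into the top-level schema.
print(json.dumps(schema["examples"], indent=2))

# The same model validates a JSON string shaped like the example.
parsed = MultiSearchQueryResponse.model_validate_json(json.dumps(schema["examples"][0]))
print(parsed.products[0].product_name)
```

Because the description and the examples both travel inside the schema, a single model definition feeds the prompt, the validation step, and the API documentation.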

Crafting the FastAPI Application

After establishing our models, it's time to use them to define the request and response structure of our FastAPI application. To interact with the GPT-4 Vision API, we will use the async OpenAI Python client.

from openai import AsyncOpenAI
from fastapi import FastAPI
from pydantic import BaseModel, ConfigDict

client = AsyncOpenAI()
app = FastAPI(
    title="Ecommerce Vision API",
    description="""A FastAPI application to extract products
    from images and describe them as an array of queries""",
    version="0.1.0",
)


class ImageRequest(BaseModel):  # (1)!
    url: str
    temperature: float = 0.0
    max_tokens: int = 1800

    model_config = ConfigDict(
        json_schema_extra={
            "examples": [
                {
                    "url": "https://mensfashionpostingcom.files.wordpress.com/2020/03/fbe79-img_5052.jpg?w=768",
                    "temperature": 0.0,
                    "max_tokens": 1800,
                }
            ]
        }
    )


@app.post("/api/extract_products", response_model=MultiSearchQueryResponse)  # (2)!
async def extract_products(image_request: ImageRequest) -> MultiSearchQueryResponse:  # (3)!
    completion = await client.chat.completions.create(
        model="gpt-4-vision-preview",  # (4)!
        max_tokens=image_request.max_tokens,
        temperature=image_request.temperature,
        # Stop at the closing code fence so the reply contains only the JSON body.
        stop=["```"],
        messages=[
            {
                "role": "system",
                "content": f"""
                You are an expert system designed to extract products from images for
                an ecommerce application. Please provide the product name and a
                descriptive query to search for the product. Accurately identify every
                product in an image and provide a descriptive query to search for the
                product. You just return a correctly formatted JSON object with the
                product name and query for each product in the image and follows the
                schema below:

                JSON Schema:
                {MultiSearchQueryResponse.model_json_schema()}""",  # (5)!
            },
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": """Extract the products from the image,
                        and describe them in a query in JSON format""",
                    },
                    {
                        "type": "image_url",
                        "image_url": {"url": image_request.url},
                    },
                ],
            },
            {
                "role": "assistant",
                "content": "```json",  # (6)!
            },
        ],
    )
    # Validate the raw JSON text against the response model.
    return MultiSearchQueryResponse.model_validate_json(completion.choices[0].message.content)

1. The ImageRequest model encapsulates the request details for the /api/extract_products endpoint. It includes the image URL for product extraction, alongside temperature and max_tokens settings to tune the language model's behavior.

2. The /api/extract_products endpoint processes requests described by the ImageRequest model and returns a MultiSearchQueryResponse. The response_model argument enforces response validation and drives the automatic generation of API documentation.

3. The handler function for the /api/extract_products endpoint accepts an ImageRequest as its input and returns a MultiSearchQueryResponse as its output.

4. The OpenAI Python client calls the GPT-4 Vision API, using the gpt-4-vision-preview model to extract product details from the provided image.

5. The MultiSearchQueryResponse model's model_json_schema method builds the JSON schema that is included in the prompt sent to the language model. This schema guides the language model toward appropriately structured responses.

6. To improve the likelihood of receiving well-structured responses, the assistant message is pre-filled with the opening of a JSON code fence (three backticks followed by json). Together with the stop sequence of three backticks, this means the returned content should contain only the JSON body.
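The pre-filled assistant message and the stop sequence make well-formed JSON likely, but they do not guarantee it, so the final validation step can still fail. The following is a minimal sketch of one way to handle that, assuming Pydantic v2. The helper name parse_products and the choice to raise ValueError are assumptions made for illustration and are not part of the application above; the models are trimmed-down copies so the example runs on its own.

```python
from typing import List, Optional

from pydantic import BaseModel, ValidationError


class SearchQuery(BaseModel):
    product_name: str
    query: str


class MultiSearchQueryResponse(BaseModel):
    products: List[SearchQuery]


def parse_products(content: Optional[str]) -> MultiSearchQueryResponse:
    """Validate raw model output (hypothetical helper, not part of the original app)."""
    if content is None:
        # The message content may be missing entirely, so check before parsing.
        raise ValueError("the model returned no content")
    try:
        return MultiSearchQueryResponse.model_validate_json(content)
    except ValidationError as exc:
        # Covers both invalid JSON and JSON that does not match the schema.
        raise ValueError(
            f"model output failed validation: {exc.error_count()} error(s)"
        ) from exc


good = parse_products(
    '{"products": [{"product_name": "Nike Air Max", "query": "black running shoes"}]}'
)
print(good.products[0].product_name)

try:
    # The "query" field is missing, so validation fails.
    parse_products('{"products": [{"product_name": "Nike Air Max"}]}')
except ValueError as err:
    print(err)
```

Inside the endpoint, such an error could be translated into an HTTP error response instead of an unhandled exception; which status code to return is a design choice for the application.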

Running the FastAPI application

To run the FastAPI application, we can use the uvicorn command-line tool. With the code saved in a file named app.py, the following command starts the application:

uvicorn app:app --reload

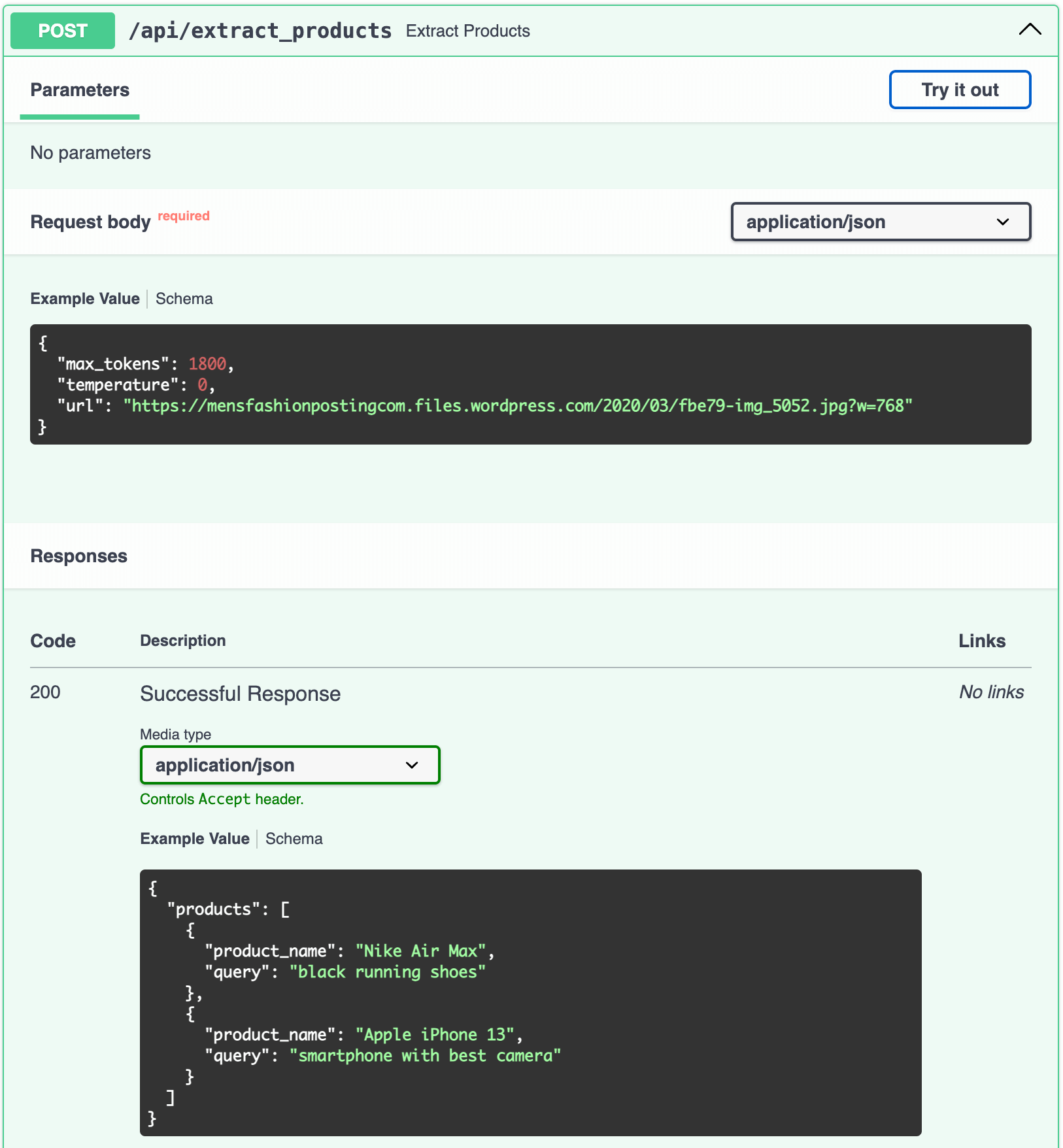

Visiting the documentation

Once the application is running, we can visit the /docs endpoint at localhost:8000/docs. The documentation is generated automatically, and the examples we defined appear under Example Value.

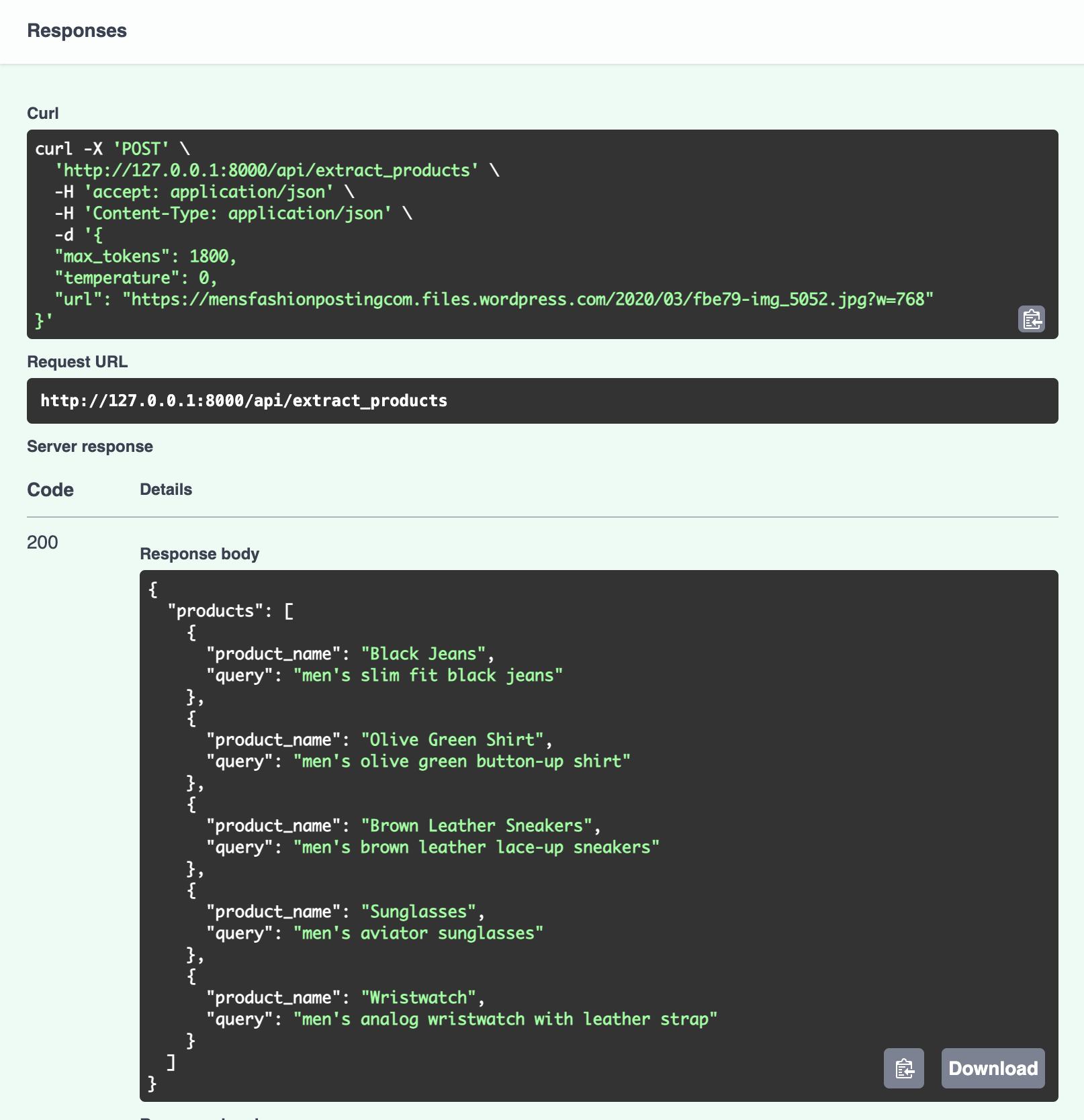

Testing the API

Once you hit 'Try it out' and 'Execute', you'll see the response from the language model, formatted according to the MultiSearchQueryResponse model we defined earlier.
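The same request can also be sent from a script. The following is a minimal sketch using only the Python standard library. It assumes the server is running locally on uvicorn's default port 8000 and reuses the example image URL from the ImageRequest model; the function names build_request and call_extract_products are hypothetical.

```python
import json
import urllib.request

# Assumption: the app is served locally on uvicorn's default port.
API_URL = "http://localhost:8000/api/extract_products"
IMAGE_URL = "https://mensfashionpostingcom.files.wordpress.com/2020/03/fbe79-img_5052.jpg?w=768"


def build_request(
    image_url: str, temperature: float = 0.0, max_tokens: int = 1800
) -> urllib.request.Request:
    """Build a POST request whose JSON body matches the ImageRequest model."""
    payload = {"url": image_url, "temperature": temperature, "max_tokens": max_tokens}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_extract_products(image_url: str) -> dict:
    """Send the request and decode the JSON response (requires a running server)."""
    with urllib.request.urlopen(build_request(image_url)) as response:
        return json.load(response)


request = build_request(IMAGE_URL)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# With the server running, uncomment to call the endpoint:
# print(call_extract_products(IMAGE_URL))
```

The decoded response is a dictionary with a products list, mirroring the MultiSearchQueryResponse model.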

Future of AI Engineering

With the increasing availability of language models that offer JSON output, Pydantic is emerging as a crucial tool in the AI Engineering toolkit. It has demonstrated its utility in modeling data for extraction, handling requests, and managing responses, which are essential for deploying FastAPI applications. This underscores Pydantic's role as an invaluable asset for developing AI-powered web applications in Python.