Q2 has wrapped up and we've been busy. Here's what we shipped recently.

🎯 Logfire Major Feature Releases

Rebuilt Dashboard Engine, New Standard Dashboards

We rebuilt Logfire’s dashboard system from scratch. The new engine brings faster performance, richer customization, and a more intuitive monitoring interface; see the new Dashboards Guide for details. Plus, we've released Standard Dashboards alongside our existing Custom ones. These pre-built dashboards work out of the box, with no SQL or configuration required. Use them as-is or copy them to build your own.

Logfire MCP Server Integration

The new Logfire MCP Server lets LLMs:

- Query logs, traces, and metrics

- Analyze distributed traces

- Run custom queries via Logfire’s OpenTelemetry-native API

Check out the demo video showing integration with Cursor, where you can ask your AI assistant questions like "are there any patterns in recent 404s?" while coding and watch it automatically write SQL queries against your live data and analyze the results for you.
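To give a feel for the kind of query an assistant might write for the "patterns in recent 404s" question, here is a minimal, self-contained sketch. It runs against an in-memory SQLite table rather than Logfire itself: the `requests` table, its columns, the sample rows, and the `top_404_paths` helper are all hypothetical stand-ins, not Logfire's actual schema or API.

```python
import sqlite3

# Hypothetical, simplified stand-in for request data; NOT Logfire's real schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE requests (path TEXT, status_code INTEGER, user_agent TEXT)"
)
conn.executemany(
    "INSERT INTO requests VALUES (?, ?, ?)",
    [
        ("/favicon.ico", 404, "browser"),
        ("/wp-login.php", 404, "scanner"),
        ("/wp-login.php", 404, "scanner"),
        ("/wp-login.php", 404, "scanner"),
        ("/api/users", 200, "browser"),
        ("/favicon.ico", 404, "browser"),
    ],
)


def top_404_paths(connection, limit=5):
    """Return (path, hits) pairs for the most frequent 404 responses."""
    query = """
        SELECT path, COUNT(*) AS hits
        FROM requests
        WHERE status_code = 404
        GROUP BY path
        ORDER BY hits DESC, path ASC
        LIMIT ?
    """
    return connection.execute(query, (limit,)).fetchall()


rows = top_404_paths(conn)
for path, hits in rows:
    print(f"{path}: {hits}")
```

Grouping by path and sorting by count is one simple way to surface a pattern, such as a scanner repeatedly probing the same missing URL. A real query would also filter on a time window and use the column names from the SQL Reference.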

Improved Evals Integration with Logfire

Pydantic Evals is implemented using OpenTelemetry to record traces of the evaluation process. These traces contain all the information included in the terminal output as attributes, but also include full tracing from the executions of the evaluation task function.

You can send these traces to any OpenTelemetry-compatible backend, including Pydantic Logfire.

Logfire now has dedicated support for Pydantic Evals traces, including a table view of the evaluation results on the evaluation root span. Check out the docs for more information, or learn more about our AI observability platform.

Convert to Org

We've now made it easy to convert your personal account into an organization, making it simpler to collaborate with a team and manage projects at scale. This is also handy for users upgrading to Pro, who might want to move projects under a corporate organization name.

Enhanced SQL Docs

Our expanded SQL Reference covers all key records in the Logfire data schema. It's ideal for understanding the power of Logfire, or for passing to your favorite LLM as context. This has been one of our most frequently requested pieces of documentation, and we hope you find it useful.

🏢 Enterprise & Self-Hosting

Self-Hosted Deployments

Need full control over your observability stack? Our self-hosting docs guide you through Helm deployments—local or production—with battle-tested configuration examples. Self-hosted Logfire is already running in production at household-name organizations, with more companies currently onboarding. We’ve invested heavily in a streamlined deployment process (see our open-source Helm chart), and teams report that day-to-day maintenance is far less than expected. To discuss a rollout, email [email protected].

Trust & Compliance

See our Trust & Compliance page for details on SOC 2 Type II, HIPAA and GDPR compliance measures, and current security posture.

🛠️ Developer Experience

- VS Code Extension (beta) — Query logs, traces, and metrics without leaving your editor. Marketplace link

- Browser Integration — Capture client-side logs/traces with a lightweight JS snippet. Docs

- Saved Searches — Pin and share frequently used queries for consistent monitoring. Guide

- Delete project/org/account - for easy offboarding. We pride ourselves on no vendor lock-in, and this includes making it easy to leave if you're not happy with Logfire (we hope that won't be the case!)

📚 Documentation Updates

- Comprehensive JavaScript Docs - shows JS Logfire integration patterns with the browser, Next.js, Cloudflare and others.

- Concepts for observability beginners - covers logs, spans, traces and metrics.

- Gunicorn Integration — clearer setup & best practices

- Django ORM Guide — more examples & performance tips

- Billing Guide — transparent pricing & usage examples

- Dashboard query guide - explains how best to craft your Logfire dashboard queries.

- OTel Collector Guides - covers advanced OTel collector use cases like custom scrubbing

Pydantic AI Major Improvements

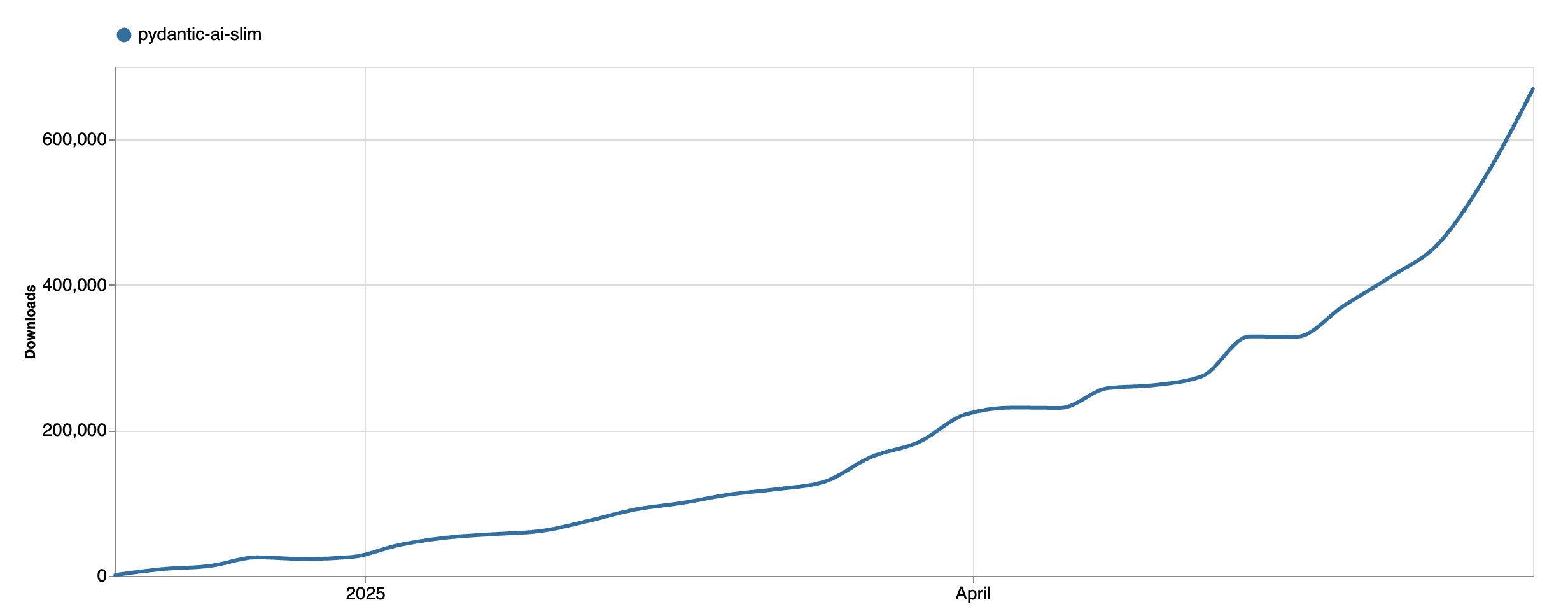

It's been great to see Pydantic AI (our AI agent framework) adoption continuing to grow. Weekly downloads

are now close to 700k:

The team and our (deeply appreciated) open-source contributors are constantly working to improve Pydantic AI. Here are some noteworthy releases, among many others:

- MCP sampling

- NativeOutput and PromptedOutput modes in addition to ToolOutput (release notes)

- Support for thinking parts

- Added A2A server

The plan is to release Pydantic AI v1 by roughly the end of July.

Pydantic Team Talks

- Samuel's talk at PyCon US: "Building AI Applications the Pydantic Way"

- Samuel's talk at AI Engineer: "MCP is all you need"

- Human-seeded Evals: Scaling Judgement with LLMs

- Our London meetup recording, with talks from MCP co-creator David Soria Parra, Jason Liu, OpenAI and Samuel. Our next London meetup will be on July 28th - stay tuned for updates!

Looking Ahead

We have big plans for the Pydantic stack and lots of exciting improvements in the works for the rest of 2025—join our Slack to be the first to hear about new features as we ship them.

Questions or Feedback?

We'd love to hear what you think! The best place to reach us is on Slack, but there are plenty of other options, which you can find here.

p.s. We're hiring again.